34 Empirical Model

An empirical model is a model constructed from real-world data, either observational or experimental. The term is sometimes used in contrast with a theoretical model, which is built on realistic simulations when an experiment would be difficult, dangerous, or morally unacceptable to carry out, or when the resources required to conduct it would be immense.

In this section, we begin with elementary model fitting. Model fitting is inherently regressive. That is, contrary to the usual procedure of drawing a graph from a known equation by plugging in a few values from the domain, we try to find a pattern, or an equation, relating the variables of interest that best fits the given data with the least error.

Let us begin our exploration by constructing a simple one-term model with the given hypothetical dataset.

![]()

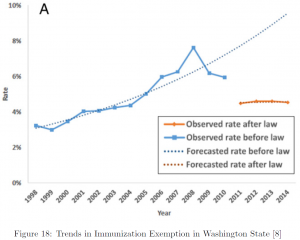

First, let us try to find a pattern with a scatter plot (Figure 16). The dotted lines represent best-fitting trendlines generated by Microsoft Excel. Panel A fits a linear model, and Panel B fits a second-order polynomial. While the straight line appears to fit the given data well, the polynomial appears to fit better. Therefore, let us proceed with the polynomial model.
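The comparison between a straight line and a second-order polynomial can be sketched in code. The following is a minimal illustration, not the Excel procedure used for the figure: the data values are hypothetical (they are not the dataset from the text), and the helper names `solve_linear_system`, `fit_polynomial`, `predict`, and `sum_squared_errors` are made up for this example. Both fits are obtained by least squares through the normal equations.

```python
def solve_linear_system(a, b):
    """Solve a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, so that row operations update both sides together.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    z = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (m[i][n] - tail) / m[i][i]
    return z


def fit_polynomial(x, y, degree):
    """Least-squares coefficients [c0, c1, ...] of c0 + c1*x + c2*x^2 + ..."""
    k = degree + 1
    # Normal equations: sum(x^(i+j)) * c_j = sum(y * x^i)
    a = [[sum(xi ** (i + j) for xi in x) for j in range(k)] for i in range(k)]
    b = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(k)]
    return solve_linear_system(a, b)


def predict(coeffs, x):
    """Evaluate the fitted polynomial at each value in x."""
    return [sum(c * xi ** p for p, c in enumerate(coeffs)) for xi in x]


def sum_squared_errors(y, y_hat):
    """Sum of squared differences between observations and predictions."""
    return sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))


# Hypothetical data (an assumption for illustration only).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 3.9, 9.1, 15.8, 25.3, 35.9]

linear = fit_polynomial(x, y, 1)
quadratic = fit_polynomial(x, y, 2)
sse_linear = sum_squared_errors(y, predict(linear, x))
sse_quadratic = sum_squared_errors(y, predict(quadratic, x))

print("linear coefficients:", linear, "SSE:", sse_linear)
print("quadratic coefficients:", quadratic, "SSE:", sse_quadratic)
```

For data that curve upward like these, the quadratic fit leaves a much smaller sum of squared errors than the straight line, which is the visual impression the two panels convey.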

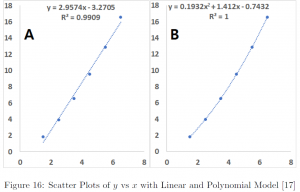

However, it is conventional to fit a model with a straight line. One of the main reasons is the ease of comparing errors. By comparing errors, we can determine whether one model is superior to another; however, it is not easy to compare errors across models of different order, e.g. linear vs polynomial, or linear vs non-linear. Therefore, we linearize the data by transforming either $x$ or $y$ alone, or both. In our example, we can compare which provides the better fit, the linear model or the second-order polynomial, by fitting ![]() vs ![]().
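Linearizing by transformation can be sketched as follows. This is a minimal example under stated assumptions: the data are hypothetical, the helper names `fit_line` and `sse_of_line` are invented here, and squaring the explanatory variable is simply one transformation chosen for illustration. The point is that, after the transformation, both candidates are straight-line fits, so their errors can be compared directly.

```python
def fit_line(u, y):
    """Ordinary least-squares slope and intercept for y = intercept + slope * u."""
    n = len(u)
    u_mean = sum(u) / n
    y_mean = sum(y) / n
    s_uy = sum((ui - u_mean) * (yi - y_mean) for ui, yi in zip(u, y))
    s_uu = sum((ui - u_mean) ** 2 for ui in u)
    slope = s_uy / s_uu
    intercept = y_mean - slope * u_mean
    return slope, intercept


def sse_of_line(u, y):
    """Sum of squared errors of the least-squares line of y on u."""
    slope, intercept = fit_line(u, y)
    return sum((yi - (intercept + slope * ui)) ** 2 for ui, yi in zip(u, y))


# Hypothetical data (an assumption for illustration only).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 3.9, 9.1, 15.8, 25.3, 35.9]

# Transform the explanatory variable, then fit a straight line as usual.
x_squared = [xi ** 2 for xi in x]

sse_raw = sse_of_line(x, y)                  # straight line in x
sse_transformed = sse_of_line(x_squared, y)  # straight line in x^2

print("SSE, y vs x:  ", sse_raw)
print("SSE, y vs x^2:", sse_transformed)
```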

Note that in Figure 17, ![]() should be read as ![](), and therefore the regressed line is

![]()

Therefore,

![]()

In the fitted models above, we see $R^2$ values, all of which are close to 1, except in Panel B, where $R^2$ is equal to 1. What does this $R^2$ denote?

In short, $R^2$ represents how well the model explains the given data. However, to define $R^2$ formally, we first need to go over a few important terms related to model fitting. While there are many criteria for fitting a model, one standard approach is the least-squares method, under which the model with the smaller errors is judged the better one. Three sums of squares are involved, as follows:

1. Sum of Squares Total (SST) is a measure of the total variation in the dataset, defined as the sum of squares of the difference between each observation $y_i$ and the mean $\bar{y}$.

\[ \mathrm{SST} = \sum_{i=1}^{n} (y_i - \bar{y})^2 \]

2. Sum of Squares Due to Regression (SSR) is a measure of the variation explained by the model, defined as the sum of squares of the difference between each predicted value $\hat{y}_i$ and the mean $\bar{y}$.

\[ \mathrm{SSR} = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2 \]

3. Sum of Squared Errors (SSE) is a measure of the variation not explained by the model, defined as the sum of squares of the difference between each observation $y_i$ and the predicted value $\hat{y}_i$.

\[ \mathrm{SSE} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \]
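The three quantities are linked: for a least-squares fit that includes an intercept, the total variation splits into an explained part and an unexplained part. The derivation below is a sketch, writing $y_i$ for an observation, $\hat{y}_i$ for its predicted value, and $\bar{y}$ for the mean of the observations (the symbols are assumed here for illustration).

```latex
\begin{align*}
\mathrm{SST}
  &= \sum_{i=1}^{n} (y_i - \bar{y})^2
   = \sum_{i=1}^{n} \bigl[(\hat{y}_i - \bar{y}) + (y_i - \hat{y}_i)\bigr]^2 \\
  &= \underbrace{\sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2}_{\mathrm{SSR}}
   + \underbrace{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}_{\mathrm{SSE}}
   + 2 \sum_{i=1}^{n} (\hat{y}_i - \bar{y})(y_i - \hat{y}_i)
\end{align*}
```

For a least-squares fit with an intercept, the residuals $y_i - \hat{y}_i$ sum to zero and are uncorrelated with the predicted values, so the last sum vanishes and $\mathrm{SST} = \mathrm{SSR} + \mathrm{SSE}$. This identity need not hold for models fitted by other criteria.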

Naturally, the more variation the model explains, the better the model. In other words, the higher the $R^2$ value, defined as the proportion of SSR over SST, the better the fit.

\[ R^2 = \frac{\mathrm{SSR}}{\mathrm{SST}} \]
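The three sums of squares and their ratio can be computed directly from their definitions. The sketch below assumes a straight-line least-squares fit and uses hypothetical data; the helper name `fit_line` is invented for this example.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = intercept + slope * x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_mean) ** 2 for xi in x)
    slope = s_xy / s_xx
    return slope, y_mean - slope * x_mean


# Hypothetical data (an assumption for illustration only).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 3.9, 9.1, 15.8, 25.3, 35.9]

slope, intercept = fit_line(x, y)
y_hat = [intercept + slope * xi for xi in x]  # predicted values
y_mean = sum(y) / len(y)

sst = sum((yi - y_mean) ** 2 for yi in y)             # total variation
ssr = sum((fi - y_mean) ** 2 for fi in y_hat)         # explained by the model
sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # left unexplained

r_squared = ssr / sst

print("SST:", sst)
print("SSR:", ssr)
print("SSE:", sse)
print("R^2:", r_squared)
```

Because the line is fitted by least squares with an intercept, SSR and SSE add up to SST here, so the same value is obtained from `1 - sse / sst`.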

Therefore, we can see a slight improvement in $R^2$ from 0.9909 to 0.9974 by fitting ![]() vs ![]() over ![]() vs ![](). Note that the $R^2$ of Panel B is equal to 1. This means the observed data line up perfectly on the fitted model. In a real-world situation, this is highly unlikely, and when it happens many would suspect that the data have been cooked, i.e. manipulated, rather than being authentic.

Now, we introduce the notion of the ladder of powers, a hierarchy of data transformations.

Ladder of Powers

\[
\begin{array}{c|c|c}
\text{Power} & \text{Transformation} & \text{Name} \\ \hline
\vdots & \vdots & \vdots \\
3 & y^3 & \text{Cubic} \\
2 & y^2 & \text{Square} \\
1 & y & \text{Original} \\
\frac{1}{2} & \sqrt{y} & \text{Square root} \\
0 & \log_{10} y \text{ or } \ln y & \text{Logarithm} \\
-\frac{1}{2} & -\frac{1}{\sqrt{y}} & \text{Reciprocal root} \\
-1 & -\frac{1}{y} & \text{Reciprocal} \\
-2 & -\frac{1}{y^2} & \text{Reciprocal square} \\
-3 & -\frac{1}{y^3} & \text{Reciprocal cubic} \\
\vdots & \vdots & \vdots
\end{array}
\]
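One way to use the table is to apply each transformation in turn, fit a straight line, and compare the resulting fits. The sketch below is only an illustration under stated assumptions: the data are hypothetical and strictly positive (the logarithm and the reciprocal transformations require this), the helper names `ladder_transform` and `r_squared_of_line` are invented here, and the proportion of variation explained is used as the score.

```python
import math


def ladder_transform(values, power):
    """Apply one rung of the ladder to strictly positive values."""
    if power == 0:
        return [math.log(v) for v in values]   # logarithm rung
    if power > 0:
        return [v ** power for v in values]
    # Negative powers carry a minus sign so that the ordering of the data is kept.
    return [-(v ** power) for v in values]


def r_squared_of_line(x, y):
    """SSR / SST for the least-squares straight line of y on x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_mean) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = y_mean - slope * x_mean
    ssr = sum((intercept + slope * xi - y_mean) ** 2 for xi in x)
    sst = sum((yi - y_mean) ** 2 for yi in y)
    return ssr / sst


# Hypothetical data (an assumption for illustration only).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 3.9, 9.1, 15.8, 25.3, 35.9]

powers = [3, 2, 1, 0.5, 0, -0.5, -1, -2, -3]
scores = {p: r_squared_of_line(x, ladder_transform(y, p)) for p in powers}
best_power = max(scores, key=scores.get)

for p in powers:
    print("power", p, "score", scores[p])
print("best power:", best_power)
```

These made-up values grow roughly like the square of `x`, so moving one rung down the ladder to the square root straightens them best. The score alone should not decide the matter, since a transformation also changes how the fitted model is interpreted.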

One commonly used criterion for deciding how to transform the data is $R^2$, the proportion of variation explained by the model. As such, the higher and the closer to 1, the better. However, there is a subtlety involved, where the sound judgement of the modeller is needed. Rethinking our example, by transforming ![]() into ![](), we did achieve an improvement in model fit, with an increase in $R^2$ from 0.9909 to 0.9974, at least in a technical sense. However, from a practical point of view, our $R^2$ was already almost 100%, with over 99% of the variability explained by the model. Would you assess an improvement of 0.65 percentage points as meaningful?

That is a matter of judgement, not a matter of fact. While in general one might not be able to argue convincingly that the improvement in $R^2$ from 0.9909 to 0.9974 is significant, there are instances where such rigor is required, for example, in establishing a law of physics. However, most of the time when data transformation is deemed necessary, we gain a meaningful improvement in $R^2$, for example, from 0.4 to 0.7 or so.

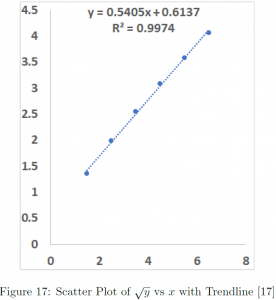

We conclude this section by introducing a real-world example [8].

Exemptions from Mandatory Immunization after Legally Mandated Parental Counseling [8]

The rapid increase in vaccine refusals and exemptions was a concern for health care professionals and the government. To tackle the issue, in 2011, Washington State implemented a senate bill requiring counseling and a signed form from a health care professional for school entrants to be exempted from mandatory immunization.

In 2018, an evaluation of the impact of the legislation was published in the medical journal Pediatrics [8], reporting a large reduction in exemption rates and a change in the trend from a steep increase to a plateau.

The modeling technique used in this research is interrupted time series, a variant of the linear model commonly used to evaluate a policy change, with the following regression equation:

\[ Y = \beta_0 + \beta_1 T + \beta_2 X + \beta_3 XT + \varepsilon \]

where $Y$ is the outcome (here, the exemption rate), $T$ is time in years, $X$ is a binary variable denoting policy implementation, and $XT$ adjusts for possible interaction between the two variables.
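An interrupted time series regression of this kind can be sketched in a few lines. The example below is an illustration only and does not reproduce the analysis or the data of [8]: it assumes the common four-term form with an intercept, a time slope, a level change at the policy date, and a slope change after it, and it uses noise-free synthetic rates generated from made-up coefficients, so the fit simply recovers them. The helper names and all numeric values are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    z = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (m[i][n] - tail) / m[i][i]
    return z


def fit_least_squares(rows, y):
    """Least-squares coefficients from the normal equations of the design rows."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic setup (all values are assumptions for illustration only).
years = list(range(14))              # time in years since the start of the series
policy_start = 7                     # first year with the policy in force
true_beta = [4.0, 0.3, -1.0, -0.25]  # intercept, slope, level change, slope change

design = []
rates = []
for t in years:
    x = 1 if t >= policy_start else 0                  # policy indicator
    row = [1.0, float(t), float(x), float(x * t)]      # 1, time, policy, interaction
    design.append(row)
    rates.append(sum(b * v for b, v in zip(true_beta, row)))

beta = fit_least_squares(design, rates)
pre_policy_slope = beta[1]
post_policy_slope = beta[1] + beta[3]   # interaction term shifts the slope

print("estimated coefficients:", beta)
print("slope before policy:", pre_policy_slope)
print("slope after policy: ", post_policy_slope)
```

The coefficient on the policy indicator captures a change in level, while the coefficient on the interaction term captures a change in slope, which is how a steep rise turning into a plateau would show up in the estimates.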