31 Interpolation and Polynomial Approximation

In this section, we discuss methods for approximating a function with polynomials and for estimating the function where its value is unknown. Estimating a value between the given (i.e. known) data points is called interpolation, and estimating a value outside their range is called extrapolation. Interpolation is generally less prone to error, and the error tends to be smaller near the known data points. Extrapolation therefore calls for caution, as the behavior of a fitted curve or approximating polynomial may be far from that of the real function.

Also, the function of interest may or may not be known. When the function is known, our goal is to obtain reasonably accurate estimates with much less computational burden. One famous example, though not applicable to every function, is the Taylor expansion. Note that the motivation for developing the Taylor expansion was to express differentiable non-polynomial functions as polynomials.

A familiar example of approximating an unknown function is interpolation and extrapolation with census data. Because a census is expensive, it is not conducted annually but at intervals of several years. Although the census bureau usually interpolates the population of an intercensal period with an exponential function, for illustration purposes we shall estimate it here with a polynomial.

We begin this section by introducing the Lagrange interpolating polynomial.

Interpolation and the Lagrange Polynomial

The $n$-th Lagrange interpolating polynomial is the formula to construct a unique polynomial connecting $n+1$ distinct points.

Theorem. (![]() -th Lagrange Interpolating Polynomial [7])

-th Lagrange Interpolating Polynomial [7])

Given ![]() for

for ![]() , the

, the ![]() -th Lagrange interpolating polynomial

-th Lagrange interpolating polynomial ![]() is a unique polynomial of at most degree

is a unique polynomial of at most degree ![]() satisfying

satisfying ![]() given by

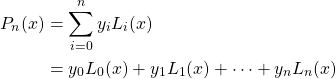

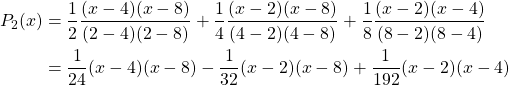

given by

where
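As a quick sanity check of the Lagrange form, the simplest case $n=1$ (two points) can be written out in full. The symbols $P_1$, $L_{1,0}$ and $L_{1,1}$ below follow the usual textbook convention and are an assumption about the notation intended here: each basis function equals 1 at its own node and 0 at the other, so the sum is just the straight line through the two points.

```latex
\begin{aligned}
L_{1,0}(x) &= \frac{x-x_1}{x_0-x_1}, \qquad L_{1,1}(x) = \frac{x-x_0}{x_1-x_0},\\
P_1(x) &= f(x_0)\,\frac{x-x_1}{x_0-x_1} + f(x_1)\,\frac{x-x_0}{x_1-x_0},\\
P_1(x_0) &= f(x_0)\cdot 1 + f(x_1)\cdot 0 = f(x_0), \qquad
P_1(x_1) = f(x_0)\cdot 0 + f(x_1)\cdot 1 = f(x_1).
\end{aligned}
```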

Let us illustrate with an example.

Example. Estimating U.S. Population in 2020

Following are the historical U.S. population data from the U.S. Census Bureau [1].

![Rendered by QuickLaTeX.com \[\begin{array}{c|c} \text{Year} & \text{Population in Millions} \\ \hline 1990 & 249 \\ 2000 & 281 \\ 2010 & 309 \end{array}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-6bc49ed26e1e775d866d615203754d84_l3.png)

Using the Lagrange interpolating method, estimate the U.S. population in 2005 and 2020.

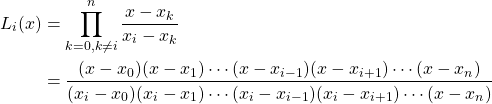

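We have 3 data points. Therefore, the Lagrange polynomial, where $x$ is the year, will be of second order as follows:

\[\begin{aligned} P_2(x) &= 249\,\frac{(x-2000)(x-2010)}{(1990-2000)(1990-2010)} + 281\,\frac{(x-1990)(x-2010)}{(2000-1990)(2000-2010)} + 309\,\frac{(x-1990)(x-2000)}{(2010-1990)(2010-2000)}\\ &= \frac{249}{200}(x-2000)(x-2010) - \frac{281}{100}(x-1990)(x-2010) + \frac{309}{200}(x-1990)(x-2000) \end{aligned}\]

Therefore,

\[P_2(2005) = -31.125 + 210.75 + 115.875 = 295.5 \quad\text{and}\quad P_2(2020) = 249 - 843 + 927 = 333\]

Therefore, the estimated U.S. populations in 2005 and in 2020 are approximately 296 and 333 million, respectively.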
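The census estimates can be reproduced with a few lines of Python. This is only a minimal sketch: the function name `lagrange_interpolate` and the variable names are illustrative choices, not part of the original text, and the data are the three census values from the table in this example.

```python
def lagrange_interpolate(xs, ys, x):
    """Evaluate the Lagrange interpolating polynomial through (xs, ys) at x."""
    total = 0.0
    for k, (xk, yk) in enumerate(zip(xs, ys)):
        # k-th Lagrange basis polynomial: equals 1 at xk and 0 at every other node.
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != k:
                basis *= (x - xj) / (xk - xj)
        total += yk * basis
    return total


years = [1990, 2000, 2010]
population = [249, 281, 309]  # millions, from the census table in this example

estimate_2005 = lagrange_interpolate(years, population, 2005)  # interpolation
estimate_2020 = lagrange_interpolate(years, population, 2020)  # extrapolation
print(estimate_2005, estimate_2020)  # 295.5 333.0
```

Note that the 2020 value is an extrapolation, so it deserves the caution discussed at the start of this section.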

Now, let us have a look at another example of a known function.

Example. Construct a Lagrange interpolating polynomial ![]() of ![]() with the nodes ![]() , and examine the accuracy of ![]() and ![]() .

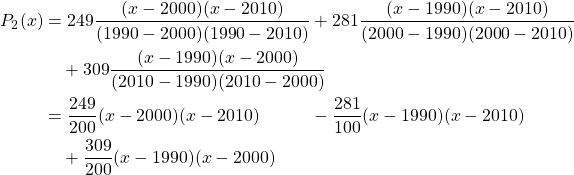

Let us construct the Lagrange polynomial first. As we have 3 data points, ![]() , ![]() , and ![]() , the Lagrange polynomial will be of second order as follows:

Therefore,

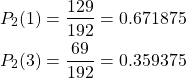

Therefore, errors are

Unlike the previous example of estimating the U.S. population, here the true function is known, so we can observe the errors in our estimates. Note that ![]() . In fact, the magnitude of the error is much larger at ![]() than at ![]() . This agrees with our expectation that the farther the interpolating or extrapolating points are from the known data points, the larger the error is likely to be.

Error Analysis of Lagrange Polynomial

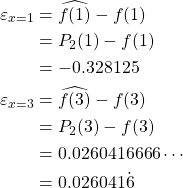

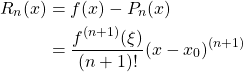

The remainder term for Lagrange polynomial is a derivation of the truncation error of Taylor expansion. First, let us restate the Taylor polynomial as follows:

where ![]() denotes the

denotes the ![]() -th order Taylor polynomial.

-th order Taylor polynomial.

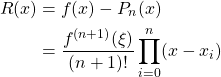

The Lagrange polynomial uses information from ![]() different points rather than a single point

different points rather than a single point ![]() in Taylor expansion. Therefore, we obtain the remainder term of Lagrange polynomial by replacing

in Taylor expansion. Therefore, we obtain the remainder term of Lagrange polynomial by replacing ![]() with

with ![]() as follows:

as follows:

The truncation error ![]() of

of ![]() -th order Lagrange polynomial is

-th order Lagrange polynomial is

where ![]() denotes the

denotes the ![]() -th order Lagrange polynomial.

-th order Lagrange polynomial.
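The remainder formula can be checked numerically. The sketch below uses a hypothetical test case that is not taken from the text: $f(x)=\sin x$ with nodes $0$, $0.5$ and $1$, for which $|f'''(x)| = |\cos x| \le 1$. It compares the actual interpolation error with the bound $\frac{1}{3!}\,|(x-x_0)(x-x_1)(x-x_2)|$ on a grid of points.

```python
import math


def lagrange_interpolate(xs, ys, x):
    """Evaluate the Lagrange interpolating polynomial through (xs, ys) at x."""
    total = 0.0
    for k, (xk, yk) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != k:
                basis *= (x - xj) / (xk - xj)
        total += yk * basis
    return total


# Hypothetical example (an assumption, not the function used in the text).
nodes = [0.0, 0.5, 1.0]
values = [math.sin(t) for t in nodes]
third_derivative_max = 1.0  # |f'''(x)| = |cos x| <= 1 everywhere


def error_bound(x):
    """Upper bound M / (n+1)! * |prod (x - x_j)| with n + 1 = len(nodes)."""
    product = 1.0
    for xj in nodes:
        product *= (x - xj)
    return third_derivative_max / math.factorial(len(nodes)) * abs(product)


grid = [i / 100 for i in range(101)]  # sample points in [0, 1]
actual_errors = [abs(math.sin(x) - lagrange_interpolate(nodes, values, x)) for x in grid]
bounds = [error_bound(x) for x in grid]

# Small tolerance absorbs floating-point rounding at the nodes.
bound_holds = all(a <= b + 1e-12 for a, b in zip(actual_errors, bounds))
print(bound_holds, max(actual_errors), max(bounds))
```

The bound is usually pessimistic because it replaces $f'''(\xi)$ with its largest possible magnitude.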

Let us illustrate with an example.

Example. Determine the maximum possible error, i.e. error bound, of the previous example.

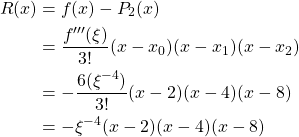

The truncation error ![]() of the 2nd order Lagrange polynomial is

of the 2nd order Lagrange polynomial is

Then, for ![]() ,

,

![]()

Therefore,

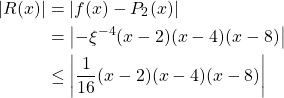

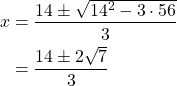

Let ![]() .

.

Then, ![]() .

.

Then, ![]() has a local minimum and maximum at

has a local minimum and maximum at

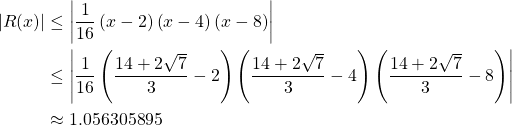

Therefore, the max of ![]() on

on ![]() is at

is at ![]() .

.

Therefore, for ![]() ,

,

Therefore, the maximum error of ![]() for

for ![]() on

on ![]() is approximately 1.06.

is approximately 1.06.

Divided Difference

We have explored a little of interpolating polynomials. However, the downside of the Lagrange polynomial is that it is computationally expensive to construct. Constructing a second order polynomial with only 3 data points was already bulky, and, as the formula of the Lagrange interpolating polynomial shows, the amount of computation grows rapidly with the number of data points: every basis polynomial involves every node, so adding a single point means recomputing all of them. Therefore, a more efficient method requiring less calculation was developed by Newton, and is called Newton’s divided-difference formula or Newton’s interpolating polynomial.

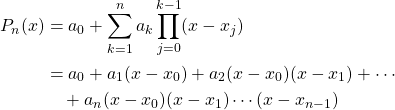

Theorem. (Newton’s Interpolating Polynomial)

The $n$-th Lagrange interpolating polynomial $P_n(x)$ passing through $(x_k, f(x_k))$ of a continuous function $f$ for $k=0,1,\cdots,n$ is unique, but it can also be expressed in an alternative form, Newton’s interpolating polynomial, as follows:

\[P_n(x) = f[x_0]+\sum^n_{k=1}f[x_0,x_{1},\cdots,x_{k}]\prod^{k-1}_{j=0}(x-x_j)\]

where $f[x_0]=f(x_0)$ and $f[x_0,x_1,\cdots,x_k]$ denotes the $k$-th divided difference of $f$ defined as

\[f[x_0,x_1,\cdots,x_k] = \frac{f[x_1,x_2,\cdots,x_k]-f[x_0,x_1,\cdots,x_{k-1}]}{x_k-x_0}\]

Proof.

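First, write an $n$-th order polynomial $P_n(x)$ as follows:

\[P_n(x) = a_0 + a_1(x-x_0) + a_2(x-x_0)(x-x_1) + \cdots + a_n(x-x_0)(x-x_1)\cdots(x-x_{n-1})\]

Then, determine the coefficients $a_k$'s where $k=0,1,\cdots,n$.

\[\begin{aligned} a_0 &= f(x_0), &&\text{since } P_n(x_0) = a_0 = f(x_0)\\ a_1 &= \frac{f(x_1)-f(x_0)}{x_1-x_0}, &&\text{since } P_n(x_1) = a_0 + a_1(x_1-x_0) = f(x_1)\\ &\vdots \end{aligned}\]

Next, introduce the divided difference notation.

The 0th divided difference of $f$ with respect to $x_i$ is

\[f[x_i] = f(x_i)\]

The 1st divided difference of $f$ with respect to $x_i$ and $x_{i+1}$ is

\[f[x_i,x_{i+1}] = \frac{f[x_{i+1}]-f[x_i]}{x_{i+1}-x_i}\]

The 2nd divided difference of $f$ with respect to $x_i$, $x_{i+1}$ and $x_{i+2}$ is

\[f[x_i,x_{i+1},x_{i+2}] = \frac{f[x_{i+1},x_{i+2}]-f[x_i,x_{i+1}]}{x_{i+2}-x_i}\]

\[\vdots\]

The $k$-th divided difference of $f$ with respect to $x_i, x_{i+1}, \cdots, x_{i+k}$ is

\[f[x_i,x_{i+1},\cdots,x_{i+k}] = \frac{f[x_{i+1},x_{i+2},\cdots,x_{i+k}]-f[x_i,x_{i+1},\cdots,x_{i+k-1}]}{x_{i+k}-x_i}\]

By letting $i=0$ and $k=n$, we have the $n$-th divided difference of $f$ as follows:

\[f[x_0,x_1,\cdots,x_n] = \frac{f[x_1,x_2,\cdots,x_n]-f[x_0,x_1,\cdots,x_{n-1}]}{x_n-x_0}\]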

Then, we have

![Rendered by QuickLaTeX.com \begin{align*} a_0 &= f[x_0]\\ a_1 &= f[x_0,x_1]\\ &\vdots\\ a_n &= f[x_0,x_{1},\cdots,x_{n}] \end{align*}](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-b88ed44f2cc695d87d8829003cb1c95c_l3.png)

Finally, express the coefficients $a_k$'s in divided difference notation

![Rendered by QuickLaTeX.com \begin{align*} P_n(x) &= f[x_0]+\sum^n_{k=1}f[x_0,x_{1},\cdots,x_{k}]\prod^{k-1}_{j=0}(x-x_j)\\ &= f[x_0]+f[x_0,x_1](x-x_0)+f[x_0,x_1,x_2](x-x_0)(x-x_1)+\cdots\\ &\quad +f[x_0,x_{1},\cdots,x_n](x-x_0)(x-x_1)\cdots(x-x_{n-1}) \end{align*}](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-e2f101f39992122f725193092a2501ca_l3.png)

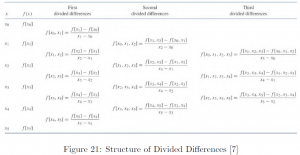

The following table shows the generation of divided differences. Note that the order of the inputs, i.e. the $x_i$'s, is irrelevant.

Let us conclude this section with an illustrative example.

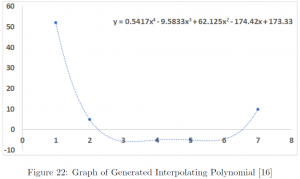

Example. Find Newton’s interpolating polynomial of the following dataset.

\[\begin{array}{c|ccccc} x & 1 & 2 & 4 & 5 & 7\\ \hline y & 52 & 5 & -5 & -5 & 10 \end{array}\]

First, we construct a divided difference table.

![Rendered by QuickLaTeX.com \[\begin{array}{cc|cccc} x &y &d_1 &d_2 &d_3 &d_4\\ \hline 1 &52 &-47.0000 &14.0000 &-3.0833 &0.541666667\\ 2 &5 &-5.0000 &1.6667 &0.1667\\ 4 &-5 &0.0000 &2.5000\\ 5 &-5 &7.5000\\ 7 &10\\ \end{array}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-78d83eba78092b922fcb85a1ebe4ca82_l3.png)

The detailed calculations in the following steps are too cumbersome to carry out by hand and are thus omitted. Also, note that the aim of this summary is to understand important concepts and theorems, and to know how to apply them. The author recommends the use of a computer package such as Python, Wolfram Mathematica, SAS, R, or Stata. Reading the coefficients off the top row of the table gives

\[P_4(x) = 52 - 47(x-1) + 14(x-1)(x-2) - 3.0833(x-1)(x-2)(x-4) + 0.5417(x-1)(x-2)(x-4)(x-5)\]

Following is the result with a graph generated using Microsoft Excel 2020.
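As one way to reproduce the divided-difference table with a computer package, here is a minimal Python sketch. The function names `divided_differences` and `newton_evaluate` are illustrative choices, not from the original text; the data are the five points of this example. The returned list corresponds to the top row of the table, i.e. the coefficients $f[x_0], f[x_0,x_1], \cdots, f[x_0,\cdots,x_4]$.

```python
def divided_differences(xs, ys):
    """Return the Newton coefficients f[x0], f[x0,x1], ..., f[x0,...,xn]."""
    coeffs = [float(y) for y in ys]
    n = len(xs)
    for order in range(1, n):
        # Update from the last entry backwards so that coeffs[i - 1]
        # still holds the previous-order difference when it is needed.
        for i in range(n - 1, order - 1, -1):
            coeffs[i] = (coeffs[i] - coeffs[i - 1]) / (xs[i] - xs[i - order])
    return coeffs


def newton_evaluate(xs, coeffs, x):
    """Evaluate Newton's interpolating polynomial by nested multiplication."""
    result = coeffs[-1]
    for k in range(len(coeffs) - 2, -1, -1):
        result = result * (x - xs[k]) + coeffs[k]
    return result


xs = [1, 2, 4, 5, 7]
ys = [52, 5, -5, -5, 10]

coeffs = divided_differences(xs, ys)
print([round(c, 4) for c in coeffs])
print([newton_evaluate(xs, coeffs, x) for x in xs])  # should reproduce ys up to rounding
```

Adding a sixth data point would only append one more coefficient to the list, which is the practical advantage of the Newton form over the Lagrange form.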

Cubic Spline Interpolation

The computational challenge of constructing a high-order Lagrange interpolating polynomial has been substantially reduced with the introduction of Newton’s method using divided differences, a method that adds information sequentially rather than using all data points at once.

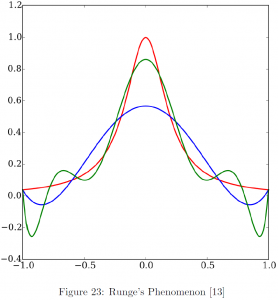

However, another problem occurs, called Runge’s phenomenon: oscillation at the edges of the fitted polynomial, especially when the polynomial is fitted with equally spaced nodes (Figure 23). The red curve in the figure denotes Runge’s function, the blue curve is a polynomial fitted with 5 data points, and the green curve is a polynomial fitted with 9 data points. Contrary to the general trend of increasing accuracy with more data points, the oscillations at the edges result in a substantial increase in error. To resolve this issue, in this section we introduce piecewise interpolation.
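Runge’s phenomenon is easy to observe numerically. The sketch below assumes the standard form of Runge’s function, $f(x) = 1/(1+25x^2)$ on $[-1, 1]$, which is not stated explicitly in the text, and measures the largest interpolation error on a fine grid for 5 and for 9 equally spaced nodes. The helper names are illustrative.

```python
def lagrange_interpolate(xs, ys, x):
    """Evaluate the Lagrange interpolating polynomial through (xs, ys) at x."""
    total = 0.0
    for k, (xk, yk) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != k:
                basis *= (x - xj) / (xk - xj)
        total += yk * basis
    return total


def runge(x):
    """Runge's function in its standard form (assumed here)."""
    return 1.0 / (1.0 + 25.0 * x * x)


def max_error(num_nodes, samples=1001):
    """Largest |f - P| on a fine grid, using equally spaced nodes on [-1, 1]."""
    nodes = [-1.0 + 2.0 * i / (num_nodes - 1) for i in range(num_nodes)]
    values = [runge(t) for t in nodes]
    grid = [-1.0 + 2.0 * i / (samples - 1) for i in range(samples)]
    return max(abs(runge(x) - lagrange_interpolate(nodes, values, x)) for x in grid)


error_5 = max_error(5)
error_9 = max_error(9)
print(error_5, error_9)  # the 9-node fit is worse near the edges than the 5-node fit
```

Under these assumptions the maximum error grows when going from 5 to 9 nodes, with the largest deviations occurring close to the endpoints of the interval.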

The simplest form would be a piecewise linear function, simply “connecting the dots.” However, we omit this case, a series of straight lines, as it is a rudimentary form that is continuous but highly unlikely to be differentiable at the nodes. A more advanced form is a piecewise-quadratic function: a series of second order polynomials connecting the points, constructed so that the tangents of adjacent pieces agree at the nodes. Let us have a look at the simplest example with only 3 nodes.

Let $(x_k, y_k)$ be the nodes for $k=0,1,2$. Then, to fit a piecewise-quadratic function, we need to construct 2 polynomials, $Q_0(x)$ and $Q_1(x)$, satisfying the following conditions:

![Rendered by QuickLaTeX.com \[\begin{cases} Q_0(x_0) = y_0\\ Q_0(x_1) = y_1 = Q_1(x_1)\\ Q_1(x_2) = y_2\\ Q'_0(x_1) =Q'_1(x_1) \end{cases}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-fd3f69849a2ccc068cea4ce2dc7e00ac_l3.png)

Note that many pairs of polynomials may satisfy the conditions above, i.e. the piecewise polynomial is not uniquely determined: $Q_0$ and $Q_1$ together have 6 unknown coefficients, while the conditions supply only 5 equations. Therefore, in general, an additional condition is imposed, such as the derivative at one of the endpoints. The difficulty, however, is that we usually do not have enough information on the derivatives at the endpoints.
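To see how one extra endpoint condition pins down the piecewise-quadratic fit, consider the following sketch. It writes each piece as $Q_i(x) = y_i + z_i(x-x_i) + c_i(x-x_i)^2$, where $z_i$ is the slope at $x_i$; this parametrization, the function names, and the sample data are assumptions chosen for illustration and do not come from the text. Once the slope at the first node is fixed, matching values and slopes at each node determines every remaining coefficient.

```python
def quadratic_spline(xs, ys, slope_at_start):
    """Return (y_i, z_i, c_i) per interval for Q_i(x) = y_i + z_i*dx + c_i*dx**2."""
    pieces = []
    z = slope_at_start
    for i in range(len(xs) - 1):
        h = xs[i + 1] - xs[i]
        # Slope at the next node, forced by Q_i(x_{i+1}) = y_{i+1}.
        z_next = 2.0 * (ys[i + 1] - ys[i]) / h - z
        pieces.append((ys[i], z, (z_next - z) / (2.0 * h)))
        z = z_next
    return pieces


def evaluate_piece(piece, x_left, x):
    """Evaluate one quadratic piece whose left node is x_left."""
    y, z, c = piece
    dx = x - x_left
    return y + z * dx + c * dx * dx


# Hypothetical nodes (not from the text).
xs = [0.0, 1.0, 2.0]
ys = [0.0, 1.0, 0.0]

pieces_flat = quadratic_spline(xs, ys, slope_at_start=0.0)
pieces_steep = quadratic_spline(xs, ys, slope_at_start=1.0)
print(pieces_flat)
print(pieces_steep)
```

Both results pass through the same three nodes with matching slopes at the middle node, yet they are different curves, which illustrates why the five conditions alone do not give a unique answer.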

Another way, in fact the most commonly used piecewise polynomial, is the piecewise-cubic or cubic spline method, which connects the specified nodes with a series of cubic functions. The main advantage of this method is that it may provide a smoother function by requiring the second derivatives (i.e. the concavity) of adjacent pieces to agree at each connecting node, at the cost of increased computation. Following is the general form of a cubic spline:

Let $(x_j, y_j)$ be nodes for the cubic spline for $j=0,1,\cdots,n$ and denote each cubic polynomial on $[x_j, x_{j+1}]$ as $S_j(x)$ for $j=0,1,\cdots,n-1$, satisfying

![Rendered by QuickLaTeX.com \[\begin{cases} S_j(x_j) &= y_j\\ S_{j+1}(x_{j+1}) &= y_{j+1}\\ S_{j}(x_{j+1}) &= S_{j+1}(x_{j+1})\\ S'_{j}(x_{j+1}) &= S'_{j+1}(x_{j+1})\\ S''_{j}(x_{j+1}) &= S''_{j+1}(x_{j+1})\\ \end{cases}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-dd83dd33a712e453e14b7033c5271c6c_l3.png)

and one of

![Rendered by QuickLaTeX.com \[\begin{cases} S''(x_0)=S''(x_n)=0 &\text{natural or free boundary}\\ S'(x_0)=f'(x_0) \text{ and } S'(x_n)=f'(x_n) &\text{clamped boundary} \end{cases}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-8425695ff44edd3da9f4c6f2898488c9_l3.png)

Let us conclude this section with an illustrative example.

Example. Construct a clamped cubic spline $S(x)$ interpolating $(1,2)$, $(2,3)$, and $(3,5)$, where $S'(1)=2$ and $S'(3)=1$.

Let $S_j(x) = a_j + b_jx + c_jx^2 + d_jx^3$ for $j=0,1$.

Then, we have

![Rendered by QuickLaTeX.com \[\begin{cases} S'_j(x) &= b_j+2c_jx +3d_jx^2\\ S''_j(x) &= 2c_j +6d_jx \end{cases}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-13fb0ddb29909d124312c5e3d7068949_l3.png)

We need to find polynomials $S_0(x)$ and $S_1(x)$ satisfying

![Rendered by QuickLaTeX.com \[\begin{cases} S_0(x_0) &= y_0\\ S_0(x_{1}) &= y_{1}\\ S_1(x_1) &= y_1\\ S_{1}(x_{2}) &= y_{2}\\ S'_{0}(x_{0}) &= y'_0\\ S'_{1}(x_{2}) &= y'_2\\ S'_{0}(x_{1}) &= S'_{1}(x_{1})\\ S''_{0}(x_{1}) &= S''_{1}(x_{1})\\ \end{cases}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-67c57ee79b2103a2c2faadb2d6be3a92_l3.png)

Solving the linear system above, the yielded cubic polynomials are

![Rendered by QuickLaTeX.com \[\begin{cases} S_0(x)= 2+2(x-1)-2.5(x-1)^2+1.5(x-1)^3 &1\leq x\leq 2\\ S_1(x)= 3+1.5(x-2)+2(x-2)^2-1.5(x-2)^3 &2\leq x\leq 3 \end{cases}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-e9ad6815151d3e772905299dcd3f1c1a_l3.png)
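The omitted linear solve can be sketched in Python. The node values $(1,2)$, $(2,3)$, $(3,5)$ and the end slopes $2$ and $1$ used below are read off from the resulting polynomials $S_0$ and $S_1$, and each piece is written as $a_j + b_jx + c_jx^2 + d_jx^3$; the helper names and the use of a small exact Gauss-Jordan elimination (rather than a library routine) are choices made for this illustration only.

```python
from fractions import Fraction


def solve_linear_system(matrix, rhs):
    """Solve a square linear system by Gauss-Jordan elimination with exact fractions."""
    n = len(rhs)
    rows = [[Fraction(v) for v in row] + [Fraction(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        # Find a row with a non-zero entry in this column and move it into place.
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [v / pivot_value for v in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [row[-1] for row in rows]


def row_for(piece, entries):
    """Place the 4 entries for (a, b, c, d) of the given piece into an 8-column row."""
    row = [0] * 8
    row[4 * piece:4 * piece + 4] = entries
    return row


def value_row(x, piece):      # coefficients of S_j(x)
    return row_for(piece, [1, x, x**2, x**3])


def slope_row(x, piece):      # coefficients of S_j'(x)
    return row_for(piece, [0, 1, 2 * x, 3 * x**2])


def curvature_row(x, piece):  # coefficients of S_j''(x)
    return row_for(piece, [0, 0, 2, 6 * x])


def difference(row_a, row_b):
    return [a - b for a, b in zip(row_a, row_b)]


matrix = [
    value_row(1, 0),                                        # S_0(1) = 2
    value_row(2, 0),                                        # S_0(2) = 3
    value_row(2, 1),                                        # S_1(2) = 3
    value_row(3, 1),                                        # S_1(3) = 5
    slope_row(1, 0),                                        # S_0'(1) = 2
    slope_row(3, 1),                                        # S_1'(3) = 1
    difference(slope_row(2, 0), slope_row(2, 1)),           # S_0'(2) = S_1'(2)
    difference(curvature_row(2, 0), curvature_row(2, 1)),   # S_0''(2) = S_1''(2)
]
rhs = [2, 3, 3, 5, 2, 1, 0, 0]

coefficients = solve_linear_system(matrix, rhs)
s0, s1 = coefficients[:4], coefficients[4:]
print([float(c) for c in s0])  # a_0, b_0, c_0, d_0
print([float(c) for c in s1])  # a_1, b_1, c_1, d_1
```

The solution is expressed in powers of $x$; expanding the two polynomials given in the text, which are written in powers of $(x-1)$ and $(x-2)$, yields the same coefficients.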

Note that the actual calculations were omitted. As the number of nodes increases, the amount of computation required increases rapidly. As such, sound judgement is required for the “tradeoff” between fitting a cubic spline and fitting an $n$-th order interpolating polynomial.