32 Numerical Calculus

This section deals with numerical calculus, i.e. techniques of numerical differentiation and numerical integration. These techniques are useful when a function of interest is continuous yet non-differentiable, or differentiable yet the computations involved are overly bulky in light of the precision we need.

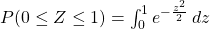

Likewise, the function of interest may not be integrable in terms of elementary functions. One familiar example is the probability density function of a normal distribution, whose antiderivative cannot be expressed explicitly in terms of elementary functions. Or again, the computations required for integration may be too expensive to be worth the resources we have. One workaround is to integrate the function's Taylor expansion term by term; however, not all functions are Taylor-expandable.

In the cases mentioned above or in any case where direct integration or differentiation is not feasible, we can attempt to obtain an estimate with the techniques of numerical calculus.

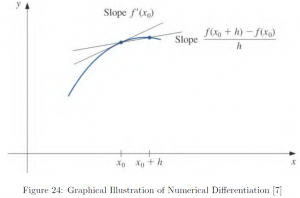

In this summary, we introduce the derivation of first-derivative formulae based on 2 points and on 3 equally spaced points. Depending on where the point whose tangent is estimated lies among the given points, the formula is called a forward-, midpoint-, or backward-difference formula.

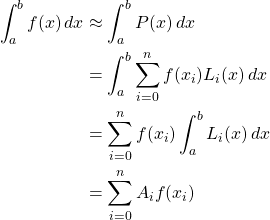

Per numerical integration, we introduce the Newton-Cotes formula, approximating with the ![]() -th order Lagrange interpolating polynomial, i.e. segment the interval of the integrand into equally spaced nodes; compute Lagrange interpolating polynomial of connecting adjacent nodes; integrate each segment and summate. Therefore,

-th order Lagrange interpolating polynomial, i.e. segment the interval of the integrand into equally spaced nodes; compute Lagrange interpolating polynomial of connecting adjacent nodes; integrate each segment and summate. Therefore,

- when

, the zeroth order Lagrange polynomial is constant, and thus it will be a summation of rectangles;

, the zeroth order Lagrange polynomial is constant, and thus it will be a summation of rectangles; - when

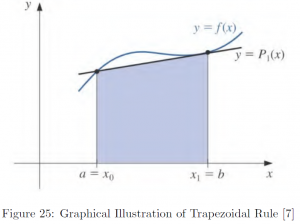

, the first order Lagrange polynomial is a straight line, and thus it will be a summation of trapezoids;

, the first order Lagrange polynomial is a straight line, and thus it will be a summation of trapezoids; - when

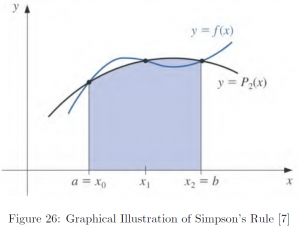

, the second order Lagrange polynomial is a parabolic curve, and the resulting summation formula involving cubic terms is called the Simpson’s rule.

, the second order Lagrange polynomial is a parabolic curve, and the resulting summation formula involving cubic terms is called the Simpson’s rule.

Then, we conclude this section with a brief introduction of other methods of numerical integration such as undetermined coefficients and composite numerical integration.

Numerical Differentiation

To begin with, let us first revisit the definition of the derivative as a limit.

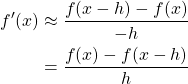

\[ f'(x_0) = \lim_{h \to 0} \frac{f(x_0+h) - f(x_0)}{h} \]

Therefore, for small $h > 0$, it follows

\[ f'(x_0) \approx \frac{f(x_0+h) - f(x_0)}{h} \]

This is our forward-difference formula. By substituting $h$ with $-h$, we obtain the backward-difference formula as follows:

\[ f'(x_0) \approx \frac{f(x_0) - f(x_0-h)}{h} \]

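Truncation errors can be obtained from Taylor’s theorem. For a twice differentiable $f$ and $h > 0$, there exists $\xi \in (x_0, x_0+h)$ such that

\[ f(x_0+h) = f(x_0) + h f'(x_0) + \frac{h^2}{2} f''(\xi) \]

i.e.

\[ f'(x_0) = \frac{f(x_0+h) - f(x_0)}{h} - \frac{h}{2} f''(\xi) \]

Likewise, for some $\xi' \in (x_0-h, x_0)$,

\[ f'(x_0) = \frac{f(x_0) - f(x_0-h)}{h} + \frac{h}{2} f''(\xi') \]

Therefore, the truncation errors for the forward-difference formula and the backward-difference formula are $-\frac{h}{2} f''(\xi)$ and $\frac{h}{2} f''(\xi')$, respectively.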
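The two 2-point formulae and their first-order truncation error can be checked numerically. The following is a minimal sketch, not part of the original text: the function names `forward_difference` and `backward_difference` and the test function $e^x$ at $x_0 = 0$ (exact derivative 1) are illustrative assumptions.

```python
import math

def forward_difference(f, x0, h):
    """2-point forward-difference estimate of f'(x0)."""
    return (f(x0 + h) - f(x0)) / h

def backward_difference(f, x0, h):
    """2-point backward-difference estimate of f'(x0)."""
    return (f(x0) - f(x0 - h)) / h

# Illustrative choice (an assumption, not from the text):
# f = exp at x0 = 0, where the exact derivative is 1.
exact = 1.0
for h in (0.1, 0.05, 0.025):
    err_fwd = abs(forward_difference(math.exp, 0.0, h) - exact)
    err_bwd = abs(backward_difference(math.exp, 0.0, h) - exact)
    print(f"h={h:<6} forward error={err_fwd:.6f}  backward error={err_bwd:.6f}")
```

Since the truncation term is proportional to $h$, halving $h$ should roughly halve both errors.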

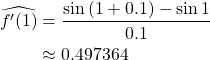

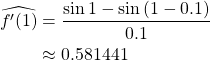

Let us illustrate with an example.

Example. Compare the estimates of ![]() with

with ![]() , obtained by 2-point forward- and backward-difference formulae.

, obtained by 2-point forward- and backward-difference formulae.

Let ![]() .

.

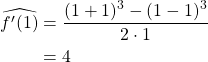

Then, ![]()

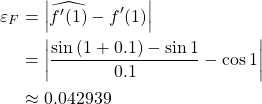

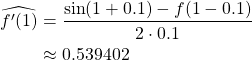

1. Forward-difference estimate

2. Backward-difference estimate

3. Compare 2 estimates

First, let us compute actual ![]() .

.

![]()

Therefore, errors are

(a) With forward-difference estimate

(b) With backward-difference estimate

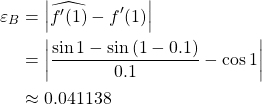

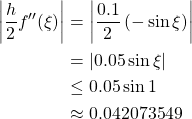

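4. Check error bounds

(a) Of forward-difference estimate

The actual error is within the error bound (here $f(x) = \sin x$, so $|f''(\xi)| = |\sin \xi|$)

\[ 0.042939 \le \frac{h}{2}\max_{\xi \in [1,\,1.1]}|f''(\xi)| = \frac{0.1}{2}\sin 1.1 \approx 0.044560 \]

(b) Of backward-difference estimate

The actual error is within the error bound

\[ 0.041138 \le \frac{h}{2}\max_{\xi \in [0.9,\,1]}|f''(\xi)| = \frac{0.1}{2}\sin 1 \approx 0.042074 \]

We can see both estimates, forward and backward, are similarly off by about 0.04, a little under 8% of the actual value of $f'(1)$.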
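The 2-point figures in this example can be reproduced with a few lines of Python. This is a sketch under an assumption: the choice $f(x) = \sin x$, $x_0 = 1$, $h = 0.1$ is inferred from the tabulated values further below (it reproduces them to six decimals) rather than stated in the surviving text.

```python
import math

f = math.sin          # assumed function, inferred from the tabulated values
x0, h = 1.0, 0.1      # assumed point and step size

forward = (f(x0 + h) - f(x0)) / h
backward = (f(x0) - f(x0 - h)) / h
exact = math.cos(x0)

err_forward = abs(exact - forward)
err_backward = abs(exact - backward)

# |f''| = |sin| is increasing on [0.9, 1.1], so the maximum over each
# interval is attained at its right endpoint.
bound_forward = h / 2 * math.sin(x0 + h)   # xi in (1, 1.1)
bound_backward = h / 2 * math.sin(x0)      # xi in (0.9, 1)

print(f"forward : {forward:.6f}  error {err_forward:.6f}  bound {bound_forward:.6f}")
print(f"backward: {backward:.6f}  error {err_backward:.6f}  bound {bound_backward:.6f}")
```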

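The 3-point formulae and the truncation term of each can also be obtained by algebraic manipulation of the Taylor expansion and Taylor’s theorem for a function that is at least three times differentiable. We introduce the results as follows:

1. 3-point Midpoint Formula

\[ f'(x_0) = \frac{1}{2h}\left[ f(x_0+h) - f(x_0-h) \right] - \frac{h^2}{6} f'''(\xi) \]

where

\[ \xi \in (x_0-h,\, x_0+h) \]

2. 3-point Forward Endpoint Formula

\[ f'(x_0) = \frac{1}{2h}\left[ -3f(x_0) + 4f(x_0+h) - f(x_0+2h) \right] + \frac{h^2}{3} f'''(\xi) \]

where

\[ \xi \in (x_0,\, x_0+2h) \]

3. 3-point Backward Endpoint Formula

\[ f'(x_0) = \frac{1}{2h}\left[ 3f(x_0) - 4f(x_0-h) + f(x_0-2h) \right] + \frac{h^2}{3} f'''(\xi) \]

where

\[ \xi \in (x_0-2h,\, x_0) \]

Note that the midpoint formula gives the most accurate estimate, with half the error bound of the other two. Let us examine the accuracy of these formulae by comparing with the estimates obtained by the 2-point formulae.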
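As a sketch of the algebra behind the midpoint formula, assuming $f'''$ is continuous near $x_0$ (the symbols $\xi_1$ and $\xi_2$ are introduced here only for illustration), expand $f$ on both sides of $x_0$:

\[
\begin{aligned}
f(x_0+h) &= f(x_0) + h f'(x_0) + \frac{h^2}{2} f''(x_0) + \frac{h^3}{6} f'''(\xi_1), \\
f(x_0-h) &= f(x_0) - h f'(x_0) + \frac{h^2}{2} f''(x_0) - \frac{h^3}{6} f'''(\xi_2).
\end{aligned}
\]

Subtracting the second line from the first cancels the even-order terms:

\[ f(x_0+h) - f(x_0-h) = 2h f'(x_0) + \frac{h^3}{6}\left[ f'''(\xi_1) + f'''(\xi_2) \right]. \]

By the intermediate value theorem there is a $\xi$ between $\xi_1$ and $\xi_2$ with $f'''(\xi) = \frac{1}{2}\left[ f'''(\xi_1) + f'''(\xi_2) \right]$, so that

\[ f'(x_0) = \frac{f(x_0+h) - f(x_0-h)}{2h} - \frac{h^2}{6} f'''(\xi). \]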

Example. Compare the estimates of ![]() with

with ![]() , obtained by 3-point midpoint, forward endpoint, and backward endpoint formulae. Also, discuss the accuracy of these estimates by comparing with the results from 2-point formulae in the previous example.

, obtained by 3-point midpoint, forward endpoint, and backward endpoint formulae. Also, discuss the accuracy of these estimates by comparing with the results from 2-point formulae in the previous example.

Let ![]() .

.

Then, ![]()

1. 3-point midpoint estimate

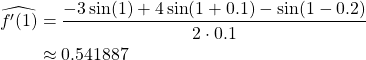

2. 3-point forward endpoint estimate

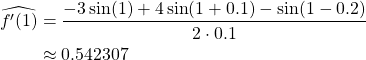

3. 3-point backward endpoint estimate

4. Error computing

We know from our previous example

![]()

Therefore, errors are

(a) With midpoint estimate

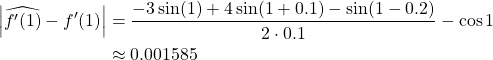

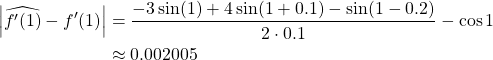

(b) With forward endpoint estimate

(c) With backward endpoint estimate

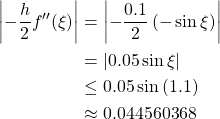

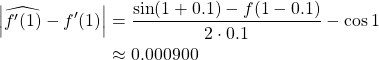

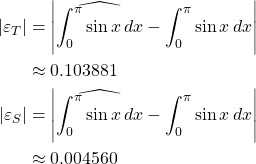

5. Comparison of errors

![Rendered by QuickLaTeX.com \[\begin{array}{c|cc} \text{Method} & \widehat{f'(1)} & \text{Error} \\ \hline \text{2-Point Forward-Difference} & 0.497364 & 0.042939 \\ \text{2-Point Backward-Difference} & 0.581441 & 0.041138 \\ \text{3-Point Midpoint} & 0.539402 & 0.000900 \\ \text{3-Point Forward Endpoint} & 0.541887 & 0.001585 \\ \text{3-Point Backward Endpoint} & 0.542307 & 0.002005 \end{array}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-1c6dcd8b5f67ac75ab822dcac28593df_l3.png)

We can see that the estimates from the 3-point formulae are much more accurate than the 2-point estimates, with errors smaller by more than an order of magnitude. Indeed, this does not come as a surprise in light of the notion that “the more information we have (about the function), the more accurate, i.e. closer to the truth, an estimate we can obtain.”
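The comparison table can be regenerated with a short script. This is a sketch under an assumption: $f(x) = \sin x$, $x_0 = 1$, $h = 0.1$ is the choice that reproduces the tabulated values to six decimals; the function names are illustrative.

```python
import math

def three_point_midpoint(f, x0, h):
    return (f(x0 + h) - f(x0 - h)) / (2 * h)

def three_point_forward_endpoint(f, x0, h):
    return (-3 * f(x0) + 4 * f(x0 + h) - f(x0 + 2 * h)) / (2 * h)

def three_point_backward_endpoint(f, x0, h):
    return (3 * f(x0) - 4 * f(x0 - h) + f(x0 - 2 * h)) / (2 * h)

f, x0, h = math.sin, 1.0, 0.1   # assumed setup, inferred from the table
exact = math.cos(x0)

estimates = {
    "2-point forward-difference": (f(x0 + h) - f(x0)) / h,
    "2-point backward-difference": (f(x0) - f(x0 - h)) / h,
    "3-point midpoint": three_point_midpoint(f, x0, h),
    "3-point forward endpoint": three_point_forward_endpoint(f, x0, h),
    "3-point backward endpoint": three_point_backward_endpoint(f, x0, h),
}

for name, estimate in estimates.items():
    print(f"{name:<28} {estimate:.6f}  {abs(exact - estimate):.6f}")
```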

However, note that our result is based on the assumption that $h$ is small. Suppose we are estimating the slope of the tangent to the polynomial ![]() at ![]() with ![]() . Then, the estimates we would have obtained are:

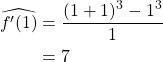

(a) 2-point forward-difference

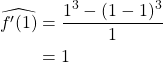

(b) 2-point backward-difference

(c) 3-point midpoint estimate

We can clearly see that all of the estimates above are grossly discordant with the true value

![]()
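The effect of a large step can be seen with any steep polynomial. The sketch below uses a hypothetical case, $f(x) = x^4$ at $x_0 = 1$ with $h = 1$, which is not the polynomial of the original example (that one did not survive in the text); the helper `estimates` is likewise illustrative.

```python
def f(x):
    return x ** 4            # hypothetical polynomial, chosen for illustration

def estimates(x0, h):
    """Return (forward, backward, midpoint) estimates of f'(x0)."""
    forward = (f(x0 + h) - f(x0)) / h
    backward = (f(x0) - f(x0 - h)) / h
    midpoint = (f(x0 + h) - f(x0 - h)) / (2 * h)
    return forward, backward, midpoint

x0 = 1.0
exact = 4 * x0 ** 3          # f'(x) = 4x^3, so f'(1) = 4

for h in (1.0, 0.01):
    fwd, bwd, mid = estimates(x0, h)
    print(f"h={h:<5} forward={fwd:.4f} backward={bwd:.4f} midpoint={mid:.4f} exact={exact}")
```

With $h = 1$ the three estimates are 15, 1, and 8 against a true value of 4, whereas with $h = 0.01$ all three are close to 4.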

Numerical Integration

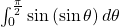

Now we turn our attention to numerical integration. As mentioned in the introduction, there are in fact many instances where an explicit form of the antiderivative cannot be found, or where the integral of a given function cannot be obtained with the elementary techniques we have covered so far, such as substitution and integration by parts. The following are such instances:

, where

, where

In cases like the above, we approximate the given function with a Lagrange polynomial. The question then becomes what order of polynomial to construct and how many nodes to place on the given interval. The process is quite similar to computing a Riemann sum; the difference is that we do not take the number of slices of the interval, denoted $n$, to infinity as done in calculus, but stop at a finite number that our computational power allows.

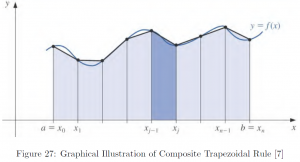

The interpolating polynomial can take the shape of a constant, a straight line, or a parabolic curve, corresponding to the zeroth, first, and second order polynomials. The resulting area under the polynomial is a rectangle, a trapezoid, or the area under a parabolic curve, and the corresponding formulae are called the rectangular rule, the trapezoidal rule, and Simpson’s rule, respectively. Note that the zeroth order case is in fact identical to the left Riemann sum.

Let us introduce the Newton-Cotes Formula, the basic form of Lagrange polynomial-based numerical integration.

Theorem. (Newton-Cotes Formula)

Let $P_n(x)$ be the $n$-th order Lagrange polynomial defined as

![Rendered by QuickLaTeX.com \[ P_n(x) = \sum^n_{i=0}f(x_i)L_i(x) \text{, where } L_i(x) = \prod^n_{k=0,k\neq i}\frac{x-x_k}{x_i-x_k}\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-8d83a25a3cd1467fb2d5e1df869bb28b_l3.png)

Then,

\[ \int_a^b f(x)\,dx \approx \int_a^b P_n(x)\,dx = \sum^n_{i=0} a_i f(x_i) \]

where $a_i = \int_a^b L_i(x)\,dx$.
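To make the theorem concrete, the weight attached to each node can be computed by integrating the corresponding Lagrange basis polynomial $L_i(x)$ over $[a, b]$. The following is a minimal sketch using exact rational arithmetic; the helper names (`poly_mul_linear`, `integrate_poly`, `newton_cotes_weights`) are illustrative and not from the text.

```python
from fractions import Fraction

def poly_mul_linear(coeffs, root):
    """Multiply a polynomial (ascending coefficients) by (x - root)."""
    out = [Fraction(0)] * (len(coeffs) + 1)
    for k, c in enumerate(coeffs):
        out[k + 1] += c          # contribution of c * x^k * x
        out[k] -= root * c       # contribution of c * x^k * (-root)
    return out

def integrate_poly(coeffs, a, b):
    """Exact integral over [a, b] of a polynomial with ascending coefficients."""
    total = Fraction(0)
    for k, c in enumerate(coeffs):
        total += c * (b ** (k + 1) - a ** (k + 1)) / (k + 1)
    return total

def newton_cotes_weights(nodes, a, b):
    """Weights: the integral of each Lagrange basis polynomial L_i over [a, b]."""
    nodes = [Fraction(x) for x in nodes]
    a, b = Fraction(a), Fraction(b)
    weights = []
    for i, xi in enumerate(nodes):
        numerator = [Fraction(1)]    # running product of (x - x_k), k != i
        denominator = Fraction(1)    # running product of (x_i - x_k), k != i
        for k, xk in enumerate(nodes):
            if k != i:
                numerator = poly_mul_linear(numerator, xk)
                denominator *= xi - xk
        weights.append(integrate_poly(numerator, a, b) / denominator)
    return weights

print(newton_cotes_weights([0], 0, 1))                    # rectangular rule
print(newton_cotes_weights([0, 1], 0, 1))                 # trapezoidal rule
print(newton_cotes_weights([0, Fraction(1, 2), 1], 0, 1)) # Simpson's rule
```

On $[0, 1]$ the weights come out as $1$; $\tfrac12, \tfrac12$; and $\tfrac16, \tfrac23, \tfrac16$, which are the coefficients of the three special cases considered next.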

Let us consider 3 special cases as follows:

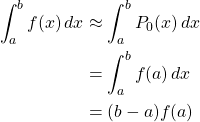

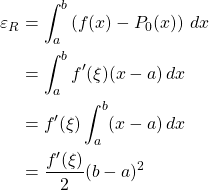

1. Rectangular Rule, where $n = 0$

Then,

\[ \int_a^b f(x)\,dx \approx (b-a) f(a) \]

And the truncation error $E$ is, for $\xi \in (a, b)$,

\[ E = \frac{(b-a)^2}{2} f'(\xi) \]

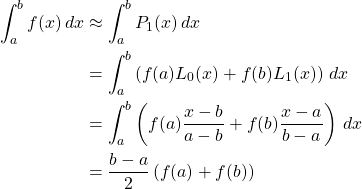

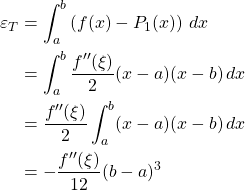

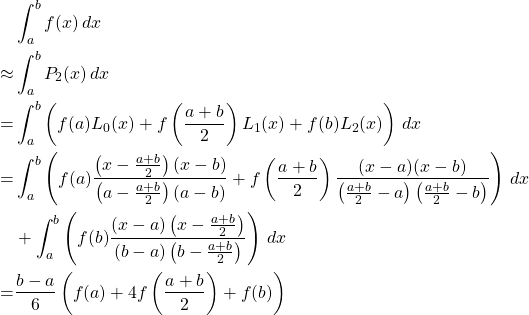

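2. Trapezoidal Rule, where $n = 1$

Then,

\[ \int_a^b f(x)\,dx \approx \frac{b-a}{2}\left[ f(a) + f(b) \right] \]

And the truncation error $E$ is, for $\xi \in (a, b)$,

\[ E = -\frac{(b-a)^3}{12} f''(\xi) \]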

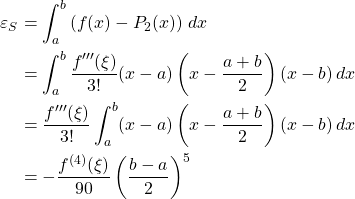

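3. Simpson’s Rule, where $n = 2$

Then, with $h = \frac{b-a}{2}$,

\[ \int_a^b f(x)\,dx \approx \frac{h}{3}\left[ f(a) + 4f(a+h) + f(b) \right] \]

And the truncation error $E$ is, for $\xi \in (a, b)$,

\[ E = -\frac{h^5}{90} f^{(4)}(\xi) \]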

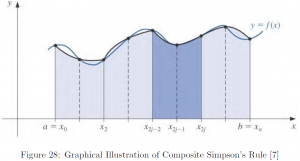
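The three single-interval rules translate directly into code. The following is a minimal sketch; the function names and the test integrand $x^3$ on $[0, 1]$ (exact integral $0.25$) are illustrative assumptions rather than part of the original text.

```python
def rectangular_rule(f, a, b):
    """Zeroth order: area of a rectangle with height f(a)."""
    return (b - a) * f(a)

def trapezoidal_rule(f, a, b):
    """First order: area of the trapezoid through (a, f(a)) and (b, f(b))."""
    return (b - a) / 2 * (f(a) + f(b))

def simpsons_rule(f, a, b):
    """Second order: area under the parabola through a, the midpoint, and b."""
    h = (b - a) / 2
    return h / 3 * (f(a) + 4 * f(a + h) + f(b))

def cube(x):
    return x ** 3   # illustrative integrand; exact integral on [0, 1] is 0.25

for rule in (rectangular_rule, trapezoidal_rule, simpsons_rule):
    print(f"{rule.__name__:<18} {rule(cube, 0.0, 1.0):.6f}")
```

Simpson’s rule reproduces the integral of a cubic up to rounding because its truncation term involves the fourth derivative, which vanishes for polynomials of degree three or less.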

However, we rarely apply the numerical integration rules over only a minimal partition. Suppose we divide the given interval $[a, b]$ into $n$ equally spaced subintervals. Then, we can label the new nodes as

\[ x_j = a + jh, \quad h = \frac{b-a}{n} \]

for $j = 0, 1, \ldots, n$. Then, by applying the trapezoidal rule over each subinterval, or Simpson’s rule over each pair of adjacent subintervals, and summing them up, we obtain the composite trapezoidal rule and the composite Simpson’s rule, respectively, as follows:

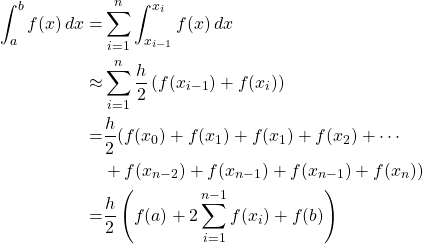

* Composite Trapezoidal Rule

\[ \int_a^b f(x)\,dx \approx \frac{h}{2} \left( f(a) + 2\sum^{n-1}_{j=1} f(x_j) + f(b) \right) \]

Derivation is as follows:

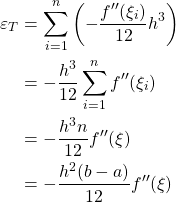

The truncation error $E$ for $\xi \in (a, b)$ is

\[ E = -\frac{b-a}{12} h^2 f''(\xi) \]

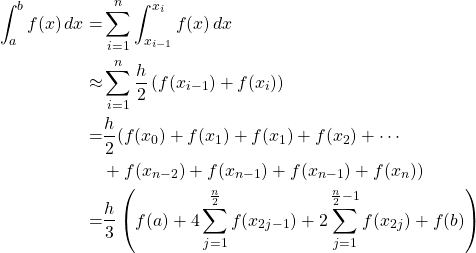

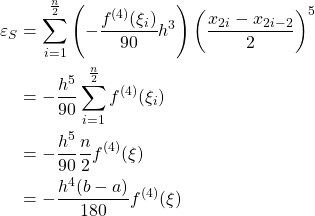

* Composite Simpson’s Rule

![Rendered by QuickLaTeX.com \[ \int_a^b f(x)\,dx \approx \frac{h}{3} \left( f(a) +4\sum^{\frac{n}{2}}_{j=1}f(x_{2j-1}) +2\sum^{\frac{n}{2}-1}_{j=1}f(x_{2j}) +f(b) \right)\]](https://iu.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-21cdf9abc988c23a9091c4f953f02d3f_l3.png)

where $h = \frac{b-a}{n}$ and $n$ is even.

Derivation is as follows:

The truncation error $E$ for $\xi \in (a, b)$ is

\[ E = -\frac{b-a}{180} h^4 f^{(4)}(\xi) \]
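Both composite rules are short to implement. The sketch below is illustrative, not the author's code: the function names are assumptions, and the test integral $\int_0^\pi \sin x\,dx = 2$ is a hypothetical stand-in chosen because $|f''|$ and $|f^{(4)}|$ are both bounded by 1, which makes the standard error bounds easy to evaluate.

```python
import math

def composite_trapezoidal(f, a, b, n):
    """Composite trapezoidal rule on n equal subintervals of [a, b]."""
    h = (b - a) / n
    interior = sum(f(a + j * h) for j in range(1, n))
    return h / 2 * (f(a) + 2 * interior + f(b))

def composite_simpson(f, a, b, n):
    """Composite Simpson's rule on n equal subintervals of [a, b]; n must be even."""
    if n % 2 != 0:
        raise ValueError("composite Simpson's rule needs an even n")
    h = (b - a) / n
    odd = sum(f(a + (2 * j - 1) * h) for j in range(1, n // 2 + 1))
    even = sum(f(a + 2 * j * h) for j in range(1, n // 2))
    return h / 3 * (f(a) + 4 * odd + 2 * even + f(b))

# Hypothetical test integral (not the example from the text):
# the integral of sin(x) over [0, pi] is exactly 2.
a, b, exact = 0.0, math.pi, 2.0
for n in (4, 8, 16):
    h = (b - a) / n
    err_trap = abs(exact - composite_trapezoidal(math.sin, a, b, n))
    err_simp = abs(exact - composite_simpson(math.sin, a, b, n))
    bound_trap = (b - a) / 12 * h ** 2     # uses max |f''| = 1
    bound_simp = (b - a) / 180 * h ** 4    # uses max |f''''| = 1
    print(f"n={n:<3} trapezoid err={err_trap:.2e} (bound {bound_trap:.2e})  "
          f"Simpson err={err_simp:.2e} (bound {bound_simp:.2e})")
```

Each doubling of $n$ should cut the trapezoidal error by roughly a factor of 4 and the Simpson error by roughly a factor of 16, in line with the $h^2$ and $h^4$ factors in the truncation terms.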

Let us conclude this section with an illustrative example.

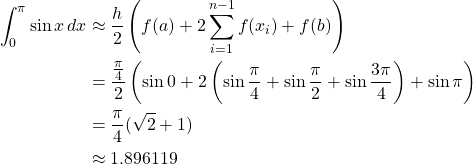

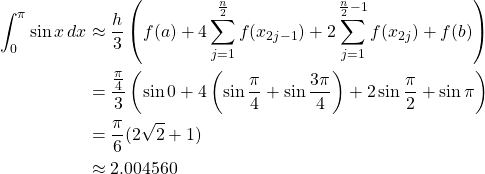

Example. Estimate ![]() using the composite trapezoidal rule and the composite Simpson’s rule, with ![]() , and determine error bounds.

In both cases, ![]() , since ![]() .

1. Composite Trapezoidal Rule

2. Composite Simpson’s Rule

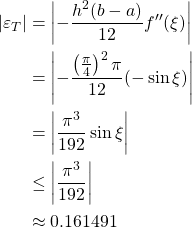

3. Error Bounds Determination

The error of approximation using the composite trapezoidal rule is

The error of approximation using the composite Simpson’s rule is

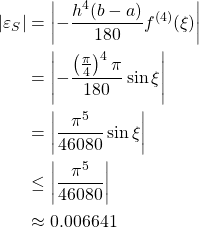

We confirm the actual errors computed below are within the error bounds.

Note the error is much smaller when using the composite Simpson’s rule than the composite trapezoidal rule. This is in line with our intuitive understanding from the graphical illustration. Indeed, increasing $n$ would result in a smaller error, and taking $n$ to infinity recovers the exact integral.