27 Vector Spaces

In the introduction, we discussed the modern understanding of linear systems as vector spaces. To begin with, let us formally define a vector space together with its axioms.

Definition. (Vector Space)

A vector space is a set $V$ upon which two operations, addition and scalar multiplication, are defined, subject to the following axioms:

For all $\mathbf{u}, \mathbf{v}, \mathbf{w} \in V$, and for all scalars $c, d$,

$(V, +)$ is a group.

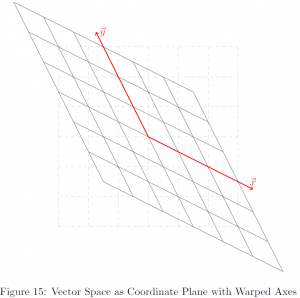

Do you get a sense of what a vector space is? In fact, the notion of a vector space is quite familiar to us, but we have often not adopted the vector space perspective. Let us think of a 2-dimensional $x$–$y$ Cartesian coordinate system. It is interesting to see that any coordinate $(x, y)$ can be seen as an element of the vector space spanned by $\begin{bmatrix} 1 \\ 0 \end{bmatrix}$ and $\begin{bmatrix} 0 \\ 1 \end{bmatrix}$. That is,

$$\mathbb{R}^2 = \operatorname{Span}\left\{ \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \end{bmatrix} \right\}$$
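As a concrete instance of writing a coordinate as a combination of the two unit vectors, consider the point $(3, -2)$. The point is chosen here purely for illustration and does not come from the original text:

```latex
\begin{bmatrix} 3 \\ -2 \end{bmatrix}
  = 3 \begin{bmatrix} 1 \\ 0 \end{bmatrix}
  + (-2) \begin{bmatrix} 0 \\ 1 \end{bmatrix}
```

The weights on the two unit vectors are simply the $x$- and $y$-coordinates of the point.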

Euclidean Space $\mathbb{R}^n$

The example we discussed was the $x$–$y$ Cartesian coordinate system in the light of a 2-dimensional vector space, i.e. $\mathbb{R}^2$. In fact, this can be expanded to the $x$–$y$–$z$ Cartesian coordinate system, or the 3-dimensional vector space $\mathbb{R}^3$, and generalized to the $n$-dimensional vector space $\mathbb{R}^n$ for any positive integer $n$, though spaces with $n > 3$ are not easily visualizable. We call this a Euclidean space. For intuitive exploration, we limit our focus to low-dimensional spaces such as $\mathbb{R}^2$ and $\mathbb{R}^3$ in this summary.

Let us reconsider the example $\mathbb{R}^2 = \operatorname{Span}\left\{ \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \end{bmatrix} \right\}$. The vectors in the spanning set coincided with the unit vectors on the $x$- and $y$-axes, respectively. However, this need not be the case. So long as the set includes at least two non-zero vectors that are not scalar multiples of each other, the set spans the whole of $\mathbb{R}^2$. Let us illustrate with an example.

Example. Show that ![](), where ![]().

Expressing ![]() in a parametric vector form, we have, for all ![]()

![]()

Let ![](), and choose ![]().

Then, there exists ![]() such that ![]().

Therefore, ![]().

Now, choose ![]().

Then, there exists ![]() such that ![]().

Therefore, ![]().

We omit the rest of the proof, and conclude ![](), where ![]().

Though we have not provided a complete proof, we believe the reader saw, at least intuitively, that the whole of $\mathbb{R}^2$ can be spanned by 2 vectors that are not scalar multiples of each other. Also, note that the continuity, or completeness, axiom of $\mathbb{R}$ is embedded in our proof.
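The spanning claim can also be checked computationally. The following is a minimal sketch, not the proof above: it solves $s\mathbf{v}_1 + t\mathbf{v}_2 = \mathbf{b}$ by Cramer's rule, which works exactly when the two vectors are not scalar multiples of each other. The function name `coefficients` and the sample vectors are assumptions made for illustration; they are not the vectors of the example.

```python
from fractions import Fraction


def coefficients(v1, v2, target):
    """Return (s, t) with s*v1 + t*v2 == target, or None if v1 and v2 are parallel.

    Vectors are pairs of integers; the 2x2 system is solved by Cramer's rule.
    """
    # Determinant of the matrix whose columns are v1 and v2.
    det = v1[0] * v2[1] - v2[0] * v1[1]
    if det == 0:
        # v1 and v2 are scalar multiples (or one is zero): they span at most a line.
        return None
    s = Fraction(target[0] * v2[1] - v2[0] * target[1], det)
    t = Fraction(v1[0] * target[1] - target[0] * v1[1], det)
    return s, t


# Hypothetical vectors, chosen only for illustration.
print(coefficients((1, 1), (1, -1), (3, 5)))  # weights s = 4, t = -1
print(coefficients((1, 2), (2, 4), (3, 5)))   # None: the vectors are parallel
```

Because the determinant is non-zero for any pair of non-parallel vectors, a pair of weights exists for every target, which is what spanning $\mathbb{R}^2$ means.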

Subspace

Now, we move on to the subspace, a special kind of subset of a vector space. The formal definition is as follows:

Definition. (Subspace)

Given a vector space $V$, a subspace $H$ is a subset of $V$ such that

1. $\mathbf{0} \in H$
2. $H$ is closed under addition
3. $H$ is closed under scalar multiplication

In short, a subspace is a subset of a vector space that contains the origin, denoted by $\mathbf{0}$, and is closed under both operations. However, note that a subspace lives in the same ambient space as its parent: its vectors have as many components as those of the parent space. That is,

![]()

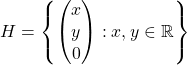

For example, the subset $\{(x, y, 0) : x, y \in \mathbb{R}\}$ is essentially an $x$–$y$ plane, yet it is defined on $\mathbb{R}^3$, and is thus a subset of $\mathbb{R}^3$. Again, in fact, ![]().
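The subspace conditions can be spot-checked numerically. The sketch below assumes the subset in question is the plane $\{(x, y, 0)\}$ in $\mathbb{R}^3$, and compares it with the shifted plane $\{(x, y, 1)\}$, which is not a subspace. All function names are hypothetical, and a random spot-check is only evidence, not a proof.

```python
import random


def add(u, v):
    """Component-wise vector addition."""
    return tuple(a + b for a, b in zip(u, v))


def scale(c, v):
    """Scalar multiplication."""
    return tuple(c * a for a in v)


def in_plane(v):
    """Membership test for the assumed subset {(x, y, 0)} of R^3."""
    return len(v) == 3 and v[2] == 0


def sample_plane(rng):
    """Draw a random integer point of the plane z = 0."""
    return (rng.randint(-9, 9), rng.randint(-9, 9), 0)


def spot_check(member, sample, trials=100, seed=0):
    """Test the zero vector and closure under both operations on random samples."""
    rng = random.Random(seed)
    if not member((0, 0, 0)):
        return False  # a subspace must contain the origin
    for _ in range(trials):
        u, v = sample(rng), sample(rng)
        c = rng.randint(-5, 5)
        if not member(add(u, v)) or not member(scale(c, u)):
            return False
    return True


print(spot_check(in_plane, sample_plane))  # True: no violation found
# The shifted plane z = 1 fails immediately because it misses the origin.
print(spot_check(lambda v: v[2] == 1,
                 lambda rng: (rng.randint(-9, 9), rng.randint(-9, 9), 1)))  # False
```

Note that every vector handled here has three components, even though the plane itself is two-dimensional.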

Null Space and Column Space

We shall conclude this chapter by introducing 2 other important concepts, the null space and the column space. In short, the null space is the set of solutions of $A\mathbf{x} = \mathbf{0}$. That is,

Definition. (Null Space)

For a linear system $A\mathbf{x} = \mathbf{0}$, the null space of $A$ is

$$\operatorname{Nul} A = \{\mathbf{x} : A\mathbf{x} = \mathbf{0}\}$$

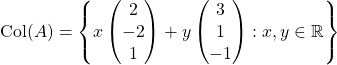

The column space of a matrix is the set spanned by its columns, i.e. the set of all linear combinations of the columns. That is,

Definition. (Column Space)

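The column space of $A = \begin{bmatrix} \mathbf{a}_1 & \cdots & \mathbf{a}_n \end{bmatrix}$ is

$$\operatorname{Col} A = \operatorname{Span}\{\mathbf{a}_1, \ldots, \mathbf{a}_n\}$$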

Let us illustrate with an example.


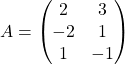

1. ![]()

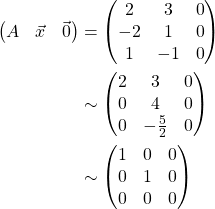

Let us row-reduce the augmented matrix of $A\mathbf{x} = \mathbf{0}$. Then,

Therefore, only the trivial solution $\mathbf{x} = \mathbf{0}$ exists, where ![]().

Therefore,

![]()

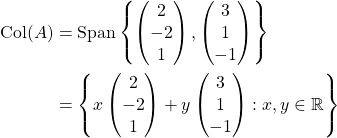

2. ![]()

By definition,

Therefore, ![]() and ![]().

Note that both $\operatorname{Nul} A$ and $\operatorname{Col} A$ are subspaces of ![](), while the null space is defined implicitly, and the column space explicitly. Also, note that $\operatorname{Nul} A$ is in fact equivalent to the kernel of the given linear transformation. Recall that the kernel is the collection of elements whose image is the identity element of the codomain, in this case $\mathbf{0}$.
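Both spaces can be read off a row reduction, which the following minimal sketch illustrates with exact rational arithmetic. The matrix `A` is a hypothetical example, not the matrix of the example above, and the function names `rref`, `null_space_basis` and `column_space_basis` are assumptions introduced here. A basis of the null space comes from the free columns of the reduced matrix, and a basis of the column space from the pivot columns of the original matrix.

```python
from fractions import Fraction


def rref(rows):
    """Return (reduced row echelon form, list of pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0  # index of the next pivot row
    for c in range(len(m[0])):
        # Find a row at or below r with a non-zero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column: it is a free column
        m[r], m[p] = m[p], m[r]
        pivot = m[r][c]
        m[r] = [x / pivot for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


def null_space_basis(rows):
    """Basis of {x : Ax = 0}, one vector per free column (empty if only x = 0)."""
    m, pivots = rref(rows)
    n = len(rows[0])
    basis = []
    for f in (c for c in range(n) if c not in pivots):
        vec = [Fraction(0)] * n
        vec[f] = Fraction(1)
        for r, p in enumerate(pivots):
            vec[p] = -m[r][f]
        basis.append(vec)
    return basis


def column_space_basis(rows):
    """Pivot columns of the original matrix; they form a basis of the column space."""
    _, pivots = rref(rows)
    return [[row[c] for row in rows] for c in pivots]


A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]  # hypothetical matrix
print(null_space_basis(A))    # one basis vector, equal to (-1, -1, 1)
print(column_space_basis(A))  # [[1, 2, 1], [2, 4, 0]]
```

When the returned null space basis is empty, only the trivial solution exists, so the null space is $\{\mathbf{0}\}$. This mirrors the implicit and explicit descriptions: the null space has to be solved for, while the column space is spanned directly by columns of the matrix.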